The Impact of Deepfake AI on Society and Technology

From the manipulation of media to the creation of counterfeit videos, deepfake AI has raised new ethical questions for both society and technology. Learn more about its implications here!

Deepfake AI is a rapidly evolving technology with the potential to profoundly change our online world. Because it can be used to create fake, often malicious media and to manipulate digital content, its ethical implications need careful consideration to protect society and its citizens from harm.

Deepfake technology uses artificial intelligence (AI) to manipulate images and videos, creating realistic but fake content. While deepfakes have many potential uses, such as in the entertainment industry, they also raise concerns about misuse.

- Deepfake pornography, which typically places women's faces into explicit content without their consent, is a major issue.

- Deepfakes might spread disinformation and damage public trust in institutions, according to the US Department of Homeland Security.

- Deepfake technology can be used to construct an artificial face, person, or object for fraud, espionage, or infiltration. Training a model on real people's faces without their consent is also unethical.

This article covers what deepfake technology is, how it works, and how deepfakes can be detected.

While deepfake technology offers improved capabilities for image manipulation, it also expands the scale of manipulation and the opportunities for bad actors to intervene. The potential misuse of this technology raises significant ethical concerns that need to be addressed through harm frameworks, ethical AI principles, and commonsense regulation.

Understand Deepfake AI technology and its implications

To understand the implications of deepfake AI, it is important to first understand what it is and how it works. Deepfakes are computer-generated images and videos that are almost indistinguishable from real footage. They are created with deep learning, a form of artificial intelligence (AI) in which algorithms detect patterns in data, learn to mimic those patterns, and generate new content. As a result, deepfakes can be used to manipulate media, compromise digital privacy and security, and disrupt democratic processes.

With deepfake AI, videos can be manipulated to make people appear as though they are saying or doing something that never actually happened. Deepfake AI technology can also be used to alter text, audio, images and other digital information, which can lead to misinformation campaigns and the spread of propaganda on social media platforms.

Furthermore, because convincing deepfakes often go undetected, the technology poses a serious threat to personal privacy: it makes it easier for attackers to impersonate victims and commit identity theft. Everyone should understand the implications of deepfake AI so that we can learn how to protect ourselves against these malicious attacks.

What is deepfake?

Deepfake refers to artificial intelligence (AI)-generated video or audio portraying a person saying or doing things they never actually did. The term combines "deep learning" and "fake." Deep learning is a subset of machine learning, which involves training artificial neural networks to perform specific tasks.

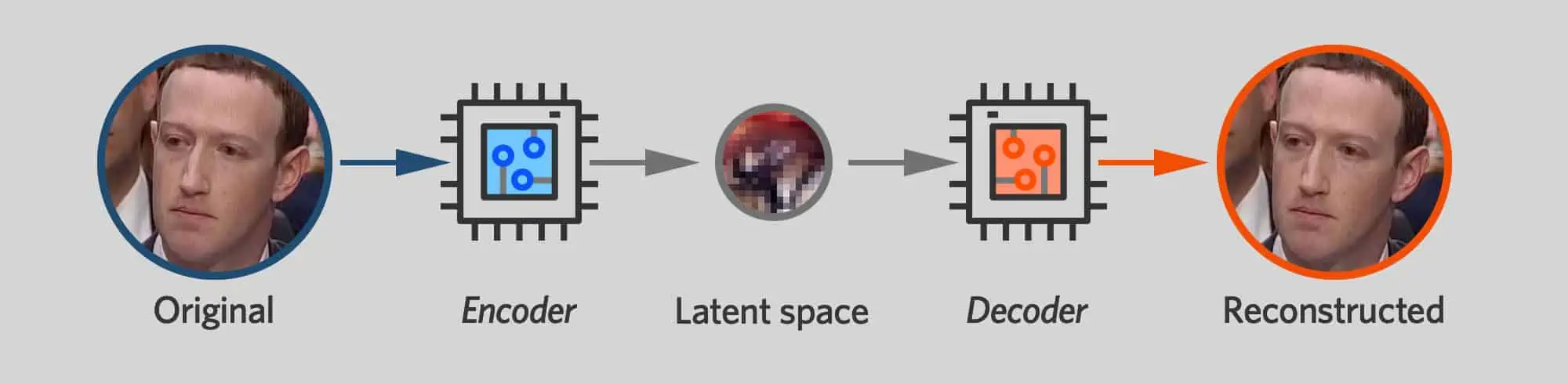

Deepfakes are often produced with a type of machine learning model called a generative adversarial network (GAN), which pits two neural networks against each other to create a convincing fake. One network, the "generator," creates fake images, video, or audio, while the other, the "discriminator," tries to tell whether a given sample is fake or real. Training repeats this contest until the generator produces fakes that the discriminator can no longer reliably distinguish from real data. GANs belong to the broader category of generative AI.
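The contest between generator and discriminator is usually expressed as a pair of loss functions. The sketch below uses the standard binary cross-entropy formulation with the commonly used "non-saturating" generator loss. It is a simplified illustration, not a deepfake system: the functions score single discriminator probabilities instead of training real neural networks, and the input values are made up for illustration.

```python
import math

# Sketch of the standard GAN losses (binary cross-entropy form).
# d_real: discriminator's estimated probability that a REAL sample is real.
# d_fake: discriminator's estimated probability that a GENERATED sample is real.

def discriminator_loss(d_real: float, d_fake: float) -> float:
    """Low when the discriminator scores real data near 1 and fakes near 0."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake: float) -> float:
    """'Non-saturating' generator loss: low when fakes are scored near 1 (judged real)."""
    return -math.log(d_fake)

# Early in training the discriminator easily spots the fakes:
print(round(discriminator_loss(0.9, 0.1), 3))  # 0.211 (discriminator is winning)
print(round(generator_loss(0.1), 3))           # 2.303 (generator is losing)

# At the theoretical equilibrium the discriminator can only guess (0.5 for everything):
print(round(discriminator_loss(0.5, 0.5), 3))  # 1.386, i.e. 2 * ln 2
print(round(generator_loss(0.5), 3))           # 0.693, i.e. ln 2
```

In a real system, each training step averages these losses over a batch, updates the discriminator's weights to lower its loss, and then updates the generator's weights to lower its own. Because the two objectives pull in opposite directions, the networks improve by competing, which is the "adversarial" part of the name.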

Deepfakes have raised concerns about their potential use in spreading misinformation, disinformation, or propaganda. For example, they could be used to create fake news, manipulate political campaigns, or even defame individuals by making them appear to say or do things they did not. However, deepfake technology also has positive applications, such as in the entertainment industry or in improving virtual reality experiences.

How does it work?

Deepfakes work by using advanced machine learning algorithms to generate a fake video or audio that appears to be real. The process involves training a neural network on a large dataset of images and/or audio recordings of the person the deepfake intends to impersonate.

The neural network is trained to analyze the facial expressions, movements, and speech patterns of the person in the dataset and then create a new image or audio clip that closely resembles them. This is often done with generative adversarial networks (GANs), in which two competing neural networks are trained together: one generates the fake content, and the other tries to detect whether it is fake or real.

Once the deepfake has been generated, it can be edited and refined using software tools to make it look more convincing. The final result can be a video or audio clip that appears genuine but is entirely fabricated.

While deepfakes can be used for harmless fun or entertainment, there are concerns that they could be used for malicious purposes, such as spreading fake news or manipulating public opinion. It is therefore crucial to be cautious and critical of the media we consume, especially if it seems too good (or bad) to be true.

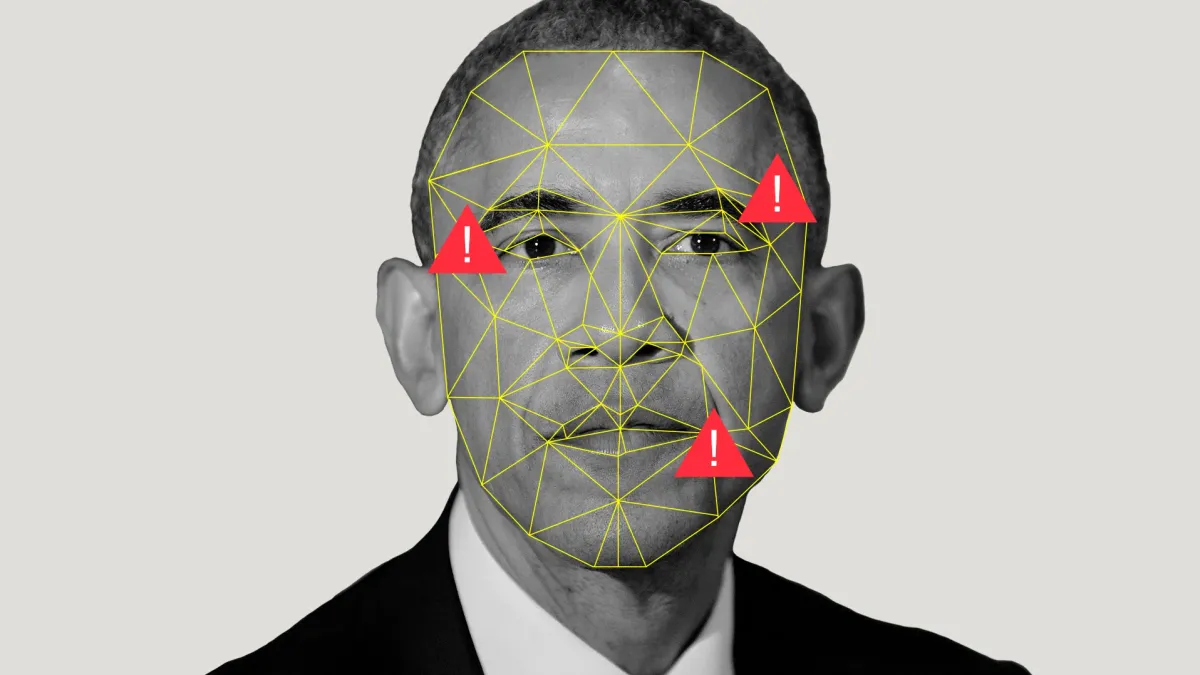

Example: Deepfake Video of Obama - Courtesy USA Today

Identify the major concerns related to Deepfake AI

The major concerns related to deepfake AI are its potential to manipulate media, undermine digital privacy and security, and interfere with democratic processes. Deepfakes can also be used to spread false information or to create counterfeit videos that misrepresent an individual's or organization's true intentions or identity. There are also open questions about who should be liable for illegal content created using deepfake AI.

According to researchers, deepfake technology poses a serious risk of spreading misinformation that can be difficult to detect. The proliferation of deepfakes has also raised questions about how AI can be used responsibly and ethically, and there are concerns about the misuse and abuse of personal data and images. As a result, it is essential for governments to develop appropriate legislation, and for technology companies to adopt matching policies, to regulate deepfake AI before malicious actors cause significant damage.

Consider the improvement of technologies that detect deepfakes

It has become increasingly necessary to develop technologies that detect deepfakes quickly and accurately, so that manipulated media or counterfeit videos can be identified before the illegal use of deepfake AI causes lasting damage. Companies and organizations should also consider investing in measures that prevent deepfakes from being used for malicious purposes, such as artificial intelligence (AI) systems that monitor social media for deepfake content.

AI systems can be trained to recognize the characteristics of manipulated media, which helps detect deepfakes early. Organizations can also use information validation and self-auditing tools that automatically cross-reference data from multiple sources, allowing them to check whether the data they receive is legitimate. Because deepfakes can be spread with minimal effort, measures are also needed to limit their circulation. For example, social media networks should develop filtering systems that flag suspicious media before it goes live, minimizing public exposure to false content. Ultimately, investing in reliable deepfake detection will go a long way toward preventing illegal use of the technology.
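Cross-referencing can be as simple as obtaining the same item from several independent sources and checking whether their cryptographic fingerprints agree. The sketch below illustrates that idea under stated assumptions; it does not describe any particular product. The `cross_check` function, the source names, and the quorum rule are hypothetical, and only Python's standard `hashlib` and `collections` modules are used.

```python
import hashlib
from collections import Counter
from typing import Dict, List, Optional, Tuple

def digest(data: bytes) -> str:
    """SHA-256 fingerprint of a file's bytes; any change to the bytes changes it."""
    return hashlib.sha256(data).hexdigest()

def cross_check(copies: Dict[str, bytes], quorum: int) -> Tuple[Optional[str], List[str]]:
    """Compare copies of the same item obtained from different sources.

    Returns the digest that at least `quorum` sources agree on (or None if no
    digest reaches the quorum) and the sorted list of sources that disagree.
    """
    if not copies:
        return None, []
    digests = {source: digest(data) for source, data in copies.items()}
    best, votes = Counter(digests.values()).most_common(1)[0]
    if votes < quorum:
        return None, sorted(digests)
    outliers = sorted(source for source, d in digests.items() if d != best)
    return best, outliers

# Hypothetical example: two outlets carry identical bytes, one repost differs.
original = b"official statement video bytes"
edited = b"official statement video bytes, re-voiced"
copies = {"outlet_a": original, "outlet_b": original, "social_repost": edited}

agreed, outliers = cross_check(copies, quorum=2)
print(agreed == digest(original), outliers)  # True ['social_repost']
```

Agreement only shows that copies are byte-for-byte identical: if every source republished the same fake, they will all agree. A mismatch is a signal to look more closely rather than proof of manipulation, since re-encoding a genuine video also changes its SHA-256 digest.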

How to detect?

Detecting deepfakes can be challenging because they are designed to be convincing and difficult to distinguish from real content. However, several methods can be used to detect deepfakes, such as:

- Forensic analysis: This involves examining the video or audio file for signs of tampering, such as inconsistencies in lighting, shadows, or reflections, or artifacts in the image or sound that indicate it has been digitally altered.

- Facial and speech recognition: Software can be used to analyze the facial expressions, movements, and speech patterns in the video or audio clip and compare them to a known dataset of the person being impersonated. Any discrepancies or anomalies can indicate that the content is a deepfake.

- Metadata analysis: The metadata embedded in a file can provide clues about its authenticity, such as the location, date, and time it was created or modified. Because metadata can be stripped or edited, it is best treated as one piece of evidence among several.

- Reverse image search: A reverse image search can reveal whether the images used in a deepfake were taken from other sources, and comparing the deepfake against those originals can show what was manipulated.

- Blockchain technology: Some companies use blockchain technology to create an immutable record of the original content, making it easier to detect deepfakes by comparing them to the source.
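Several of the methods above depend on fingerprinting images. Commercial reverse image search engines do not publish their exact methods, but one simple, widely described technique for finding near-duplicates is a perceptual hash. Unlike a cryptographic hash such as SHA-256, which changes completely when a single byte changes, a perceptual hash changes little under small edits like brightening. That property helps link a manipulated frame back to its source photo. The sketch below implements the "average hash" (aHash) on a grayscale image given as rows of 0-255 values. Decoding real image files is left out, and the test images and the 10-bit match threshold are assumptions chosen for illustration.

```python
import hashlib
from typing import List

def average_hash(pixels: List[List[int]], size: int = 8) -> int:
    """Average hash (aHash) of a grayscale image given as rows of 0-255 values.

    The image is shrunk to size x size blocks by averaging; each block
    contributes one bit: 1 if it is brighter than the mean of all blocks.
    """
    height, width = len(pixels), len(pixels[0])
    if height < size or width < size:
        raise ValueError("image must be at least size x size pixels")
    block_means = []
    for by in range(size):
        for bx in range(size):
            rows = range(by * height // size, (by + 1) * height // size)
            cols = range(bx * width // size, (bx + 1) * width // size)
            values = [pixels[y][x] for y in rows for x in cols]
            block_means.append(sum(values) / len(values))
    overall = sum(block_means) / len(block_means)
    bits = 0
    for mean in block_means:
        bits = (bits << 1) | (1 if mean > overall else 0)
    return bits

def hamming_distance(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")

def sha256_of(pixels: List[List[int]]) -> str:
    """Exact fingerprint: changes completely if any pixel changes."""
    return hashlib.sha256(bytes(v for row in pixels for v in row)).hexdigest()

# Hypothetical 16x16 test images: a left-to-right gradient, a brightened copy,
# and an inverted (negative) copy.
original = [[x * 16 for x in range(16)] for _ in range(16)]
brightened = [[min(v + 10, 255) for v in row] for row in original]
inverted = [[255 - v for v in row] for row in original]

MATCH_THRESHOLD = 10  # assumed cut-off in differing bits (out of 64)

for name, img in [("brightened", brightened), ("inverted", inverted)]:
    dist = hamming_distance(average_hash(original), average_hash(img))
    same_bytes = sha256_of(original) == sha256_of(img)
    print(name, dist, dist <= MATCH_THRESHOLD, same_bytes)
# brightened 0 True False
# inverted 64 False False
```

The brightened copy still matches its source perceptually even though its SHA-256 digest differs. This is why exact hashes suit tamper checks against a stored original, while perceptual hashes suit finding where an image came from.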

Are there any tools?

Yes, there are several tools available that can help detect deepfakes, such as:

- Deepware Scanner: This web-based tool uses artificial intelligence to analyze images and videos and detect deepfakes. It analyzes the visual cues and metadata to determine whether the content is authentic or manipulated.

- Sensity AI: This AI-powered platform uses advanced computer vision and machine learning algorithms to detect deepfakes in images and videos. It can identify manipulation techniques used in deepfakes, such as face swapping, lip-syncing, and voice cloning.

- Amber Authenticate: This tool uses blockchain technology to verify the authenticity of digital content, including images and videos. It creates an immutable record of the original content, making tampering or manipulation easier to detect.

- Reality Defender: This software tool uses machine learning to detect deepfakes in videos. It analyzes the video's facial movements and speech patterns to determine whether they are consistent with the person being impersonated.

- Deeptrace: This AI-powered platform detects deepfakes in images and videos, using computer vision and machine learning to analyze visual cues and metadata and determine whether the content is authentic or manipulated. Deeptrace has since rebranded as Sensity, so this entry and Sensity AI above refer to the same company.
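Tools like Amber Authenticate rely on an immutable record of the original content. The core idea can be sketched as a hash chain, the data structure at the heart of a blockchain: each record stores the content's SHA-256 digest plus the hash of the previous record, so silently rewriting an old record breaks every later link. This is a simplified, single-machine illustration under that assumption, not how any named product actually works. A real blockchain also distributes copies of the ledger across many parties, which this sketch omits. `ProvenanceLedger` and the file names are hypothetical.

```python
import hashlib
import json
from typing import Dict, List, Optional

GENESIS = "0" * 64  # placeholder "previous hash" for the first record

def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()

def record_hash(record: Dict[str, str]) -> str:
    """Hash a record's fields in a canonical (sorted-key) JSON form."""
    body = {k: record[k] for k in ("content_id", "content_hash", "prev_hash")}
    return sha256_hex(json.dumps(body, sort_keys=True).encode())

class ProvenanceLedger:
    """Toy append-only hash chain of content fingerprints (hypothetical design)."""

    def __init__(self) -> None:
        self.entries: List[Dict[str, str]] = []

    def register(self, content_id: str, content: bytes) -> Dict[str, str]:
        """Record the digest of an original file, linked to the previous record."""
        prev = self.entries[-1]["entry_hash"] if self.entries else GENESIS
        record = {"content_id": content_id, "content_hash": sha256_hex(content), "prev_hash": prev}
        record["entry_hash"] = record_hash(record)
        self.entries.append(record)
        return record

    def chain_is_intact(self) -> bool:
        """Recompute every link; any edited record breaks the chain."""
        prev = GENESIS
        for record in self.entries:
            if record["prev_hash"] != prev or record["entry_hash"] != record_hash(record):
                return False
            prev = record["entry_hash"]
        return True

    def matches_original(self, content_id: str, content: bytes) -> Optional[bool]:
        """True/False if content_id was registered, None if it is unknown."""
        for record in reversed(self.entries):
            if record["content_id"] == content_id:
                return record["content_hash"] == sha256_hex(content)
        return None

ledger = ProvenanceLedger()
ledger.register("interview.mp4", b"original footage bytes")
ledger.register("press-photo.jpg", b"original photo bytes")

print(ledger.matches_original("interview.mp4", b"original footage bytes"))  # True
print(ledger.matches_original("interview.mp4", b"face-swapped bytes"))      # False
print(ledger.matches_original("unknown.mp4", b"anything"))                  # None

# Quietly rewriting a stored record is detectable: the chain no longer links up.
ledger.entries[0]["content_hash"] = sha256_hex(b"face-swapped bytes")
print(ledger.chain_is_intact())  # False
```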

These detection methods and tools are not foolproof and may miss some types of deepfakes, especially as the technology keeps evolving. It is therefore important to stay vigilant and critical when consuming media and to always verify the source of any information before accepting it as true.

Examine the legal framework surrounding deepfakes

In order to minimize the risks associated with deepfakes, it is important for governments and institutions to develop a legal framework for regulating their use. This includes providing guidelines and regulations that clearly state any potential legal penalties for creating or using Deepfake AI-generated content. Furthermore, people need to be made aware of the potential consequences of using such technologies as well as how they can protect themselves from malicious actors who seek to exploit deepfakes for illegal activities.

Deepfakes should be banned or regulated in certain cases, such as when they are used to defame or slander another person or in fraudulent practices. It should also be illegal to distribute deepfakes without clearly indicating that the content is not a real recording. In addition, safeguards should be put in place to detect and track deepfakes created for malicious use. Finally, individuals who create and distribute deepfakes for these purposes should face legal repercussions, which would discourage malicious actors from doing the same.

Analyze what organizations can do to protect themselves from abuse of deepfake technology

Organizations can take a variety of steps to protect themselves from abuse of deepfake technology. First, they should educate staff on the dangers of using or creating deepfakes and establish policies that spell out the consequences of misuse. They should also develop a strategy for detecting and disrupting deepfakes used against them, and investigate and report any attempted abuse in order to protect their interests. Where warranted, organizations can also limit access to software or hardware capable of creating deepfakes.

Organizations should also audit their networks regularly and follow security best practices such as strong passwords, two-factor authentication, and encryption. In addition, they should monitor online activity within the organization, including employees' use of social media, to ensure staff are not creating or promoting deepfakes. Existing detection technology can flag suspicious content so that potential deepfakes are identified early. Finally, organizations can consult experts to better understand how deepfakes can be used for malicious purposes before building a robust response plan.

What is the government doing?

Governments worldwide are taking steps to address the issue of deepfakes and their potential impact on society. Some of the measures being taken include:

- Legislation: Several countries, including the United States, Canada, and Australia, have passed or proposed legislation that would criminalize the creation and distribution of deepfakes for malicious purposes.

- Funding for research: Governments are investing in research to develop new technologies and methods for detecting and combating deepfakes. For example, the U.S. Defense Advanced Research Projects Agency (DARPA) has run programs such as Media Forensics (MediFor) and Semantic Forensics (SemaFor) to develop tools for detecting manipulated media.

- Awareness campaigns: Governments are also launching public awareness campaigns to educate people about the dangers of deepfakes and how to identify them. These campaigns often involve working with social media platforms and other technology companies to promote media literacy and critical thinking skills.

- International cooperation: Some governments are working together to address the issue of deepfakes on a global scale. For example, the G7 countries issued a joint statement in 2019 calling for increased cooperation and information sharing to combat deepfakes.

Addressing the issue of deepfakes is a complex and ongoing challenge that requires a multifaceted approach. While governments have a role to play, it is also essential for individuals, social media platforms, and technology companies to take responsibility and work together to mitigate the risks associated with this technology.

In the USA, Europe, and China

In the United States, several bills addressing deepfakes have been introduced in Congress, including the Malicious Deep Fake Prohibition Act, the Deepfake Report Act, and the Defending Each and Every Person from False Appearances by Keeping Exploitation Subject to Accountability Act (DEEP FAKES Accountability Act). These bills propose various measures, such as criminalizing the creation and distribution of deepfakes for malicious purposes, requiring altered media to carry clear disclosures such as watermarks, and directing the Department of Homeland Security to report on deepfake technology.

In the European Union, the Digital Services Act (DSA), adopted in 2022, regulates online platforms and aims to curb the spread of illegal and harmful content, which can include deepfakes. It requires platforms to act against illegal content and to be transparent about their content moderation practices. Its companion law, the Digital Markets Act, focuses on competition among large "gatekeeper" platforms rather than on harmful content.

Other countries have also introduced rules to prevent the spread of deepfakes. China, for example, regulates "deep synthesis" services under provisions in effect since January 2023, which require synthetic content to be clearly labeled and prohibit using it to produce or spread false news.

Across the board, the issue of deepfakes is complex, and there is still much to be done to address it effectively. As a result, we will likely continue to see new laws and regulations introduced, as well as increased investment in technologies to detect and prevent deepfakes in the coming years.

Stay vigilant and safe.